Our last activity covered the basics of Scilab. This time we are tasked to use it to process an image and measure an area. This, for me, is our first real image processing activity. The goal of this module is to measure the actual area of a building or any place from a photo of it.

For this activity, we use Green’s theorem, which relates the area enclosed by a closed curve to a contour integral taken along that curve. In discrete form, the area A inside the curve is given by:

$$
A = \frac{1}{2}\sum_{i=1}^{N}\left(x_i\,y_{i+1} - y_i\,x_{i+1}\right) \tag{1}
$$

where x_i and y_i are the pixel coordinates of an edge point, x_(i+1) and y_(i+1) are the coordinates of the next point, and the sum runs over all N edge points, with the last point connecting back to the first. Because the sum is a contour integral along the curve, the points must first be arranged in increasing order of their subtended angles before the sum is evaluated.
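For readers who want the missing step, the discrete form can be recovered from Green’s theorem roughly as follows. This is a sketch of the standard textbook argument, not necessarily the derivation in the handout; the auxiliary functions F_1 and F_2 and the region R bounded by the curve C are notation introduced here for illustration.

$$
\oint_C \left(F_1\,dx + F_2\,dy\right) = \iint_R \left(\frac{\partial F_2}{\partial x} - \frac{\partial F_1}{\partial y}\right) dx\,dy
$$

Choosing F_1 = -y/2 and F_2 = x/2 makes the integrand on the right equal to 1, so the double integral is just the area:

$$
A = \iint_R dx\,dy = \frac{1}{2}\oint_C \left(x\,dy - y\,dx\right)
$$

Replacing dx and dy by the differences between consecutive edge points gives

$$
A \approx \frac{1}{2}\sum_{i=1}^{N}\left[x_i\,(y_{i+1}-y_i) - y_i\,(x_{i+1}-x_i)\right] = \frac{1}{2}\sum_{i=1}^{N}\left(x_i\,y_{i+1} - y_i\,x_{i+1}\right)
$$

The sum is positive when the points are traversed counterclockwise, which is the order produced by sorting them by increasing angle.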

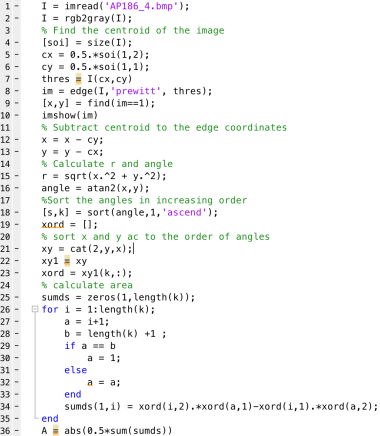

Unfortunately, I can’t use Scilab right now because it stopped reading my images, so for this activity I use Matlab instead. Matlab and Scilab are practically the same. Both of them have a built-in function that detects the edges of the shapes in an image and marks where they are. The function is called edge, and you can set the method it uses to find the edges. In my case, I use the ‘prewitt’ method. My sample image is a white-filled rectangle drawn in Microsoft Paint. The imread and rgb2gray functions of Matlab are used to load the image and convert it to grayscale. The edges of the rectangle are then detected using the edge function. The result is a matrix whose elements are either 1 or 0. The indices of the 1’s mark the edges of the input shape. The input image and the result of edge detection are presented in Figure 1.
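To give a feel for what a Prewitt edge detector does, here is a minimal sketch in plain Python. It is not the Matlab code used in this activity: the function name `prewitt_edges`, the synthetic test image, and the fixed threshold are all assumptions made for illustration. Matlab's edge also picks a threshold and thins the result, which this sketch does not attempt, so the edges here come out two pixels thick.

```python
def prewitt_edges(img, threshold):
    """Return a 0/1 edge map from 3x3 Prewitt gradients (no thinning).

    img is a list of rows of grayscale values; border pixels are left at 0.
    """
    rows, cols = len(img), len(img[0])
    edges = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            # Horizontal gradient: right column minus left column
            gx = sum(img[r + k][c + 1] - img[r + k][c - 1] for k in (-1, 0, 1))
            # Vertical gradient: bottom row minus top row
            gy = sum(img[r + 1][c + k] - img[r - 1][c + k] for k in (-1, 0, 1))
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                edges[r][c] = 1
    return edges


# Hypothetical test image: a white 4 x 6 rectangle on a black 10 x 12 background
img = [[0] * 12 for _ in range(10)]
for r in range(3, 7):
    for c in range(3, 9):
        img[r][c] = 1

edges = prewitt_edges(img, threshold=0.5)

# (x, y) coordinates of the pixels flagged as edges
edge_points = [(c, r) for r, row in enumerate(edges) for c, v in enumerate(row) if v]
```

Pixels deep inside the rectangle or far out in the background have zero gradient and stay 0; only the pixels near the boundary are flagged.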

Figure 1. The edge function of Matlab detects the edges of a rectangle (a) and returns the indices of the edge points. The edge points trace the outline of the shape as shown in (b).


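The coordinates of the centroid of the image are subtracted from the coordinates of each edge point, so that the angles are measured about a point inside the curve. Then the edge points are sorted in increasing order of their swept angle. This angle is calculated by taking the inverse tangent of the ratio of the y coordinate to the x coordinate of an edge point; a four-quadrant inverse tangent such as atan2 is needed so that the angles cover the full circle. The area is then calculated using equation 1.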
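The steps above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the Matlab code in Figure 2: the function name `area_from_edge_points` is hypothetical, the centroid is taken as the mean of the edge points rather than of the whole image, and the sort only works when every ray from the centroid crosses the outline once (true for a rectangle).

```python
import math


def area_from_edge_points(points):
    """Area enclosed by unordered (x, y) edge points, via the discrete Green's theorem sum."""
    n = len(points)
    # Centroid of the edge points (assumed stand-in for the image centroid)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    # Shift the origin to the centroid, then sort by swept angle
    centered = [(x - cx, y - cy) for x, y in points]
    centered.sort(key=lambda p: math.atan2(p[1], p[0]))
    # Sum x_i * y_(i+1) - y_i * x_(i+1), wrapping the last point to the first
    total = 0.0
    for i in range(n):
        x0, y0 = centered[i]
        x1, y1 = centered[(i + 1) % n]
        total += x0 * y1 - y0 * x1
    return abs(total) / 2


# Outline of a rectangle with corners (0, 0) and (4, 3), deliberately out of order
boundary = [(x, y) for x in range(5) for y in range(4) if x in (0, 4) or y in (0, 3)]
scrambled = boundary[::3] + boundary[1::3] + boundary[2::3]

area = area_from_edge_points(scrambled)
print(area)  # prints 12.0, the area of the 4 x 3 rectangle
```

Shifting the points does not change the enclosed area, so the subtraction only serves to make the angles well defined.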

Using the algorithm in Figure 2, the calculated area of the rectangle is 52808 pixel^2, which is within 0.01 % of the theoretical area of 52812 pixel^2.

Figure 2. Source code for area measurement.


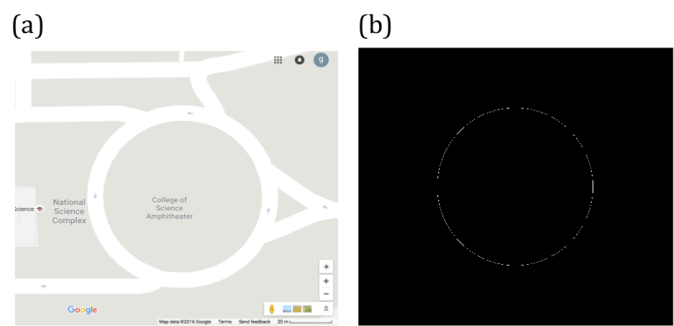

The same algorithm is used to calculate the area covered by the College of Science Amphitheater. The image is taken from Google Maps. The area of interest is filled with white and the rest of the image is painted black. The absolute scale shown on Google Maps is used to calculate the scale factor that converts pixel area to real area. The area calculated using Matlab is 4618.1 m^2, which is greater than the actual area of 4610 m^2 by 0.175 %. The map of the College of Science Amphitheater and its detected edge are shown in Figure 3.
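The conversion from pixel area to real area can be sketched as below. The scale bar length and the pixel area in the example are hypothetical numbers chosen only to show the arithmetic, since the actual values are not given in this post; the helper names are likewise assumptions. The last line checks the percent deviation quoted above.

```python
def pixel_area_to_real(area_px, bar_px, bar_m):
    """Convert an area in pixel^2 to m^2, given a scale bar that spans
    bar_px pixels and represents bar_m metres."""
    metres_per_pixel = bar_m / bar_px
    # Area scales with the square of the length scale
    return area_px * metres_per_pixel ** 2


def percent_deviation(measured, actual):
    """Absolute deviation of measured from actual, in percent."""
    return abs(measured - actual) / actual * 100


# Hypothetical example: a 50 m scale bar that is 100 pixels long,
# and a shape covering 20000 pixel^2
area_m2 = pixel_area_to_real(area_px=20000, bar_px=100, bar_m=50)  # 5000.0 m^2

# Deviation of the measured amphitheater area from the actual one
deviation = percent_deviation(4618.1, 4610)  # about 0.176 %
```

Because the scale factor enters squared, a small error in measuring the scale bar roughly doubles in the final area.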

Figure 3. Map of CS Amphitheater (a) and its edge (b).


Area measurement is also possible by loading the image of a map or an object into a program called ImageJ. ImageJ is free image processing software created by the National Institutes of Health in the US. It can measure the area of a region of interest outlined with a closed curve in the image. Once the scale factor is set from the pixel distance and the actual length between two points in the image, ImageJ can calculate the actual area of the object or place.

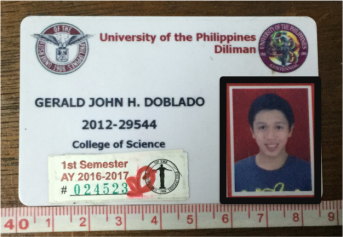

To demonstrate the area measurement tool of ImageJ, the area covered by an ID picture is estimated. The scale factor is set to 156.513 pixels/cm, obtained from the pixel length of a line drawn between two adjacent centimeter ticks on the measuring tape in the image. The area estimated by ImageJ is 8.688 cm^2. It deviates from the real area of 8.670 cm^2 by 0.018 cm^2, or about 0.21 %. The object of interest is shown in Figure 4.

Figure 4. The area covered by the ID picture (outlined by the black rectangle) is measured using the analyze tool of ImageJ.


My results are very close to the theoretical areas of the objects I analyzed. My largest percent error, from the ImageJ measurement (8.688 cm^2 against 8.670 cm^2), is about 0.21 %. Therefore, I think I have done well in this activity, and I rate myself 9.

Acknowledgement:

I would like to thank Ms. Krizzia Mañago for reviewing my work.

Reference:

[1] M. Soriano, Applied Physics 186 A4- Length and Area Estimation in Images. 2014.

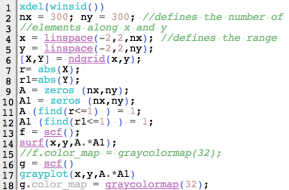

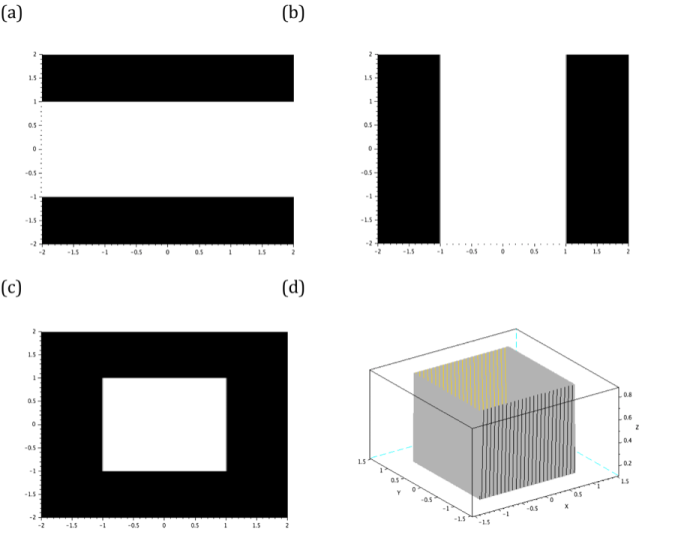

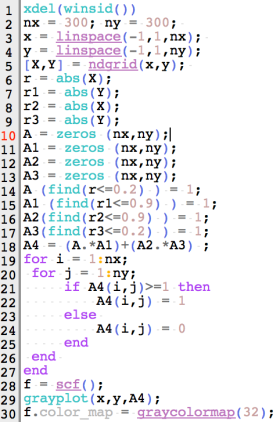

Figure 1. Code snippet for square aperture.


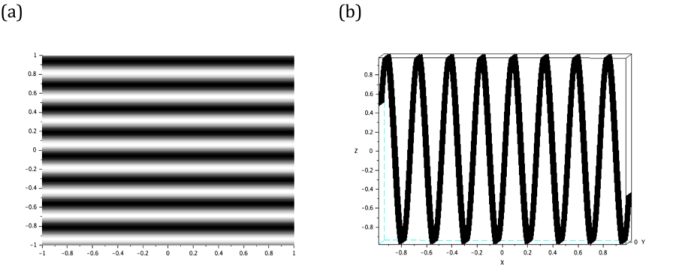

Figure 4. The sinusoidal image is generated by taking the sine of the Y grid. Its line scan is also given to visualize the profile of the image.

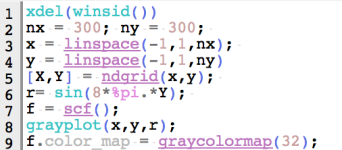

Figure 3. Code snippet for sinusoid image.

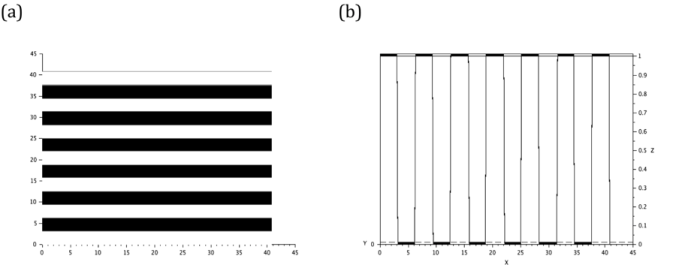

Figure 5. The binarization of the sinusoid in previous selections yields a grating (a). The profile (b) of the image is also given.

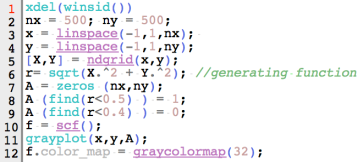

Figure 6. Code snippet for annulus.

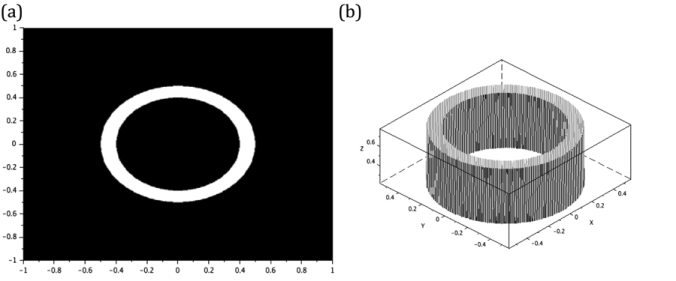

Figure 7. The generated annulus (a) has outer and inner radii of 0.5 and 0.4, respectively. Its profile is given in (b).

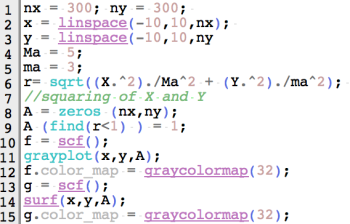

Figure 8. Code snippet for ellipse image.

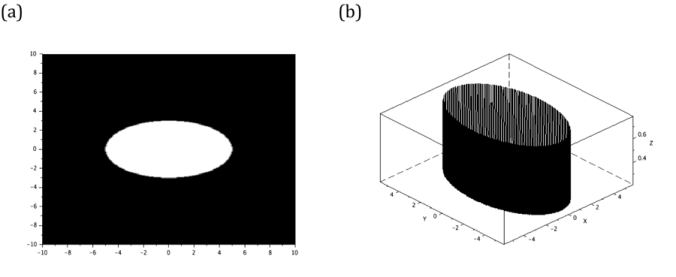

Figure 9. The generated ellipse (a), with major and minor axis lengths of 5 and 3 units respectively, and its profile (b) are shown here.

Figure 10. Code snippet for cross.

Figure 11. The cross (a) is generated by adding two orthogonal 1.8 x 0.4 rectangles. Its profile is also given here (b).

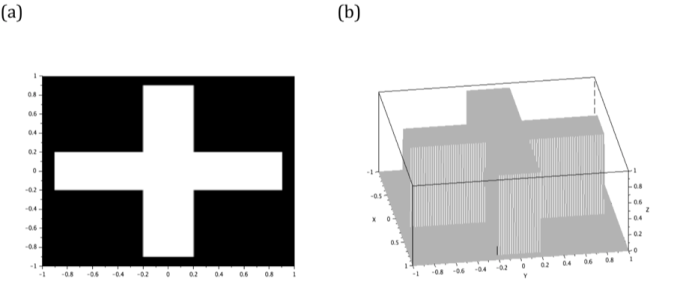

Figure 13. The Gaussian mask (a) and its profile (b).


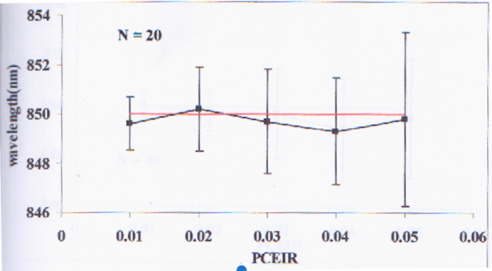

Figure 2. Superimposed image and reconstructed plot.
